Prof. Dr. Anselm Haselhoff

Vehicle Information Technology, Machine Learning, Computer Vision

Office: 01.208

Lab: 04.104

Phone: +49 208 88254-829

Mail: anselm.haselhoff@hs-ruhrwest.de

Anselm Haselhoff is a Professor of Vehicle Information Technology at the Computer Science Institute of Ruhr West University of Applied Sciences. He completed his Bachelor’s and Master’s degrees in Information Technology at the University of Wuppertal, with additional studies at Queensland University of Technology. His PhD research, supervised by Prof. Dr. Anton Kummert, focused on machine learning and computer vision for vehicle perception.

He currently leads the Trustworthy AI Laboratory at Ruhr West University of Applied Sciences and has collaborated with researchers internationally, including a stay as a visiting researcher at the Sydney AI Center (Director: Tongliang Liu) at the University of Sydney. His industry experience includes work at Delphi Deutschland GmbH (now Aptiv PLC), where he contributed to computer vision for autonomous vehicles.

Professor Haselhoff’s research interests include information and signal processing, machine learning, deep learning, autonomous driving, and computer vision, with a particular focus on areas such as:

Uncertainty Calibration and its Application to Object Detection

PhD Thesis – Fabian Küppers

The Gaussian Discriminant Variational Autoencoder (GdVAE): A Self-Explainable Model with Counterfactual Explanations

Visual counterfactual explanation (CF) methods modify image concepts, e.g., shape, to change a prediction to a predefined outcome while closely resembling the original query image. Unlike self-explainable models (SEMs) and heatmap techniques, they grant users the ability to examine hypothetical “what-if” scenarios. Previous CF methods either entail post-hoc training, limiting the balance between transparency and […]

Quantifying Local Model Validity using Active Learning

Machine learning models in real-world applications must often meet regulatory standards, requiring low approximation errors. Global metrics are too insensitive, and local validity checks are costly. This method learns model error to estimate local validity efficiently using active learning, requiring less data. It demonstrates better sensitivity to local validity changes and effective error modeling with […]

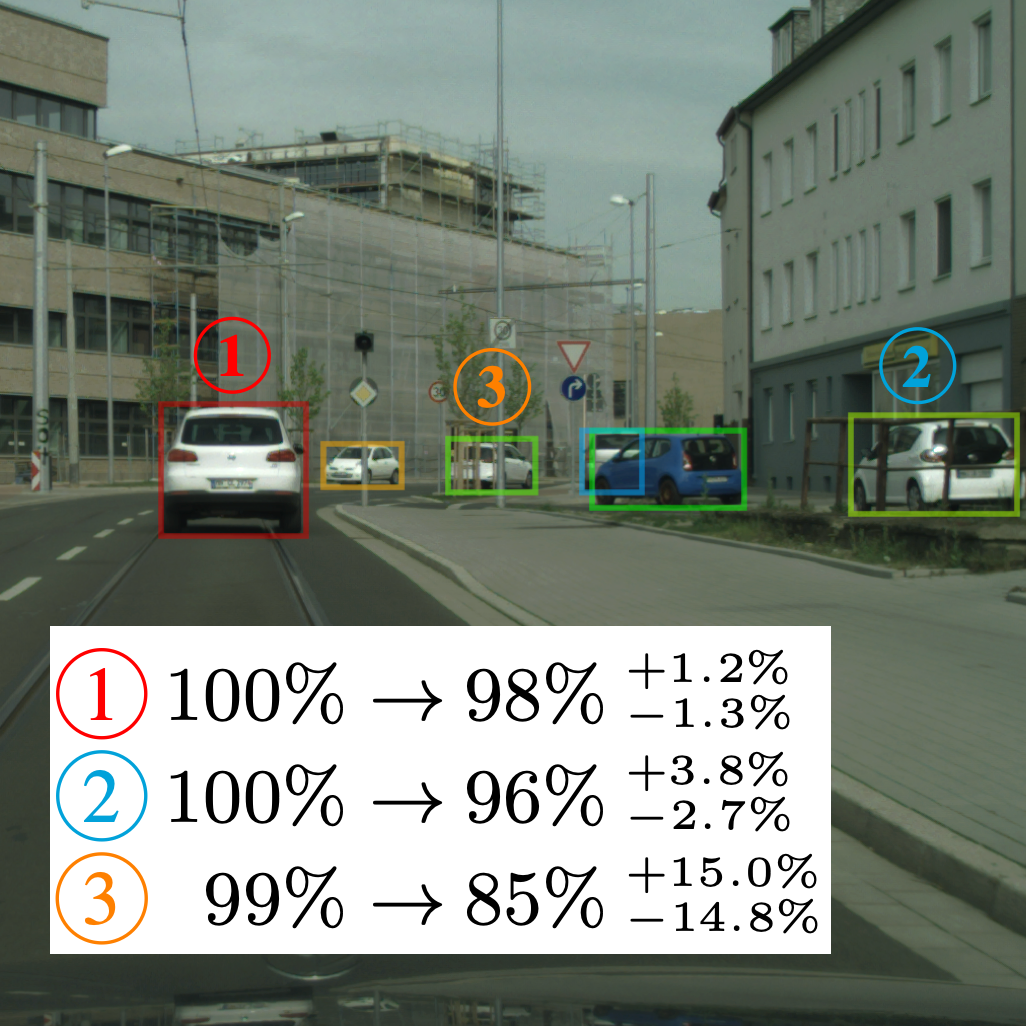

Parametric and Multivariate Uncertainty Calibration for Regression and Object Detection

We inspect the calibration properties of common detection networks and extend state-of-the-art recalibration methods. Our methods use a Gaussian process (GP) recalibration scheme that yields parametric distributions as output (e.g. Gaussian or Cauchy). The usage of GP recalibration allows for a local (conditional) uncertainty calibration by capturing dependencies between neighboring samples.

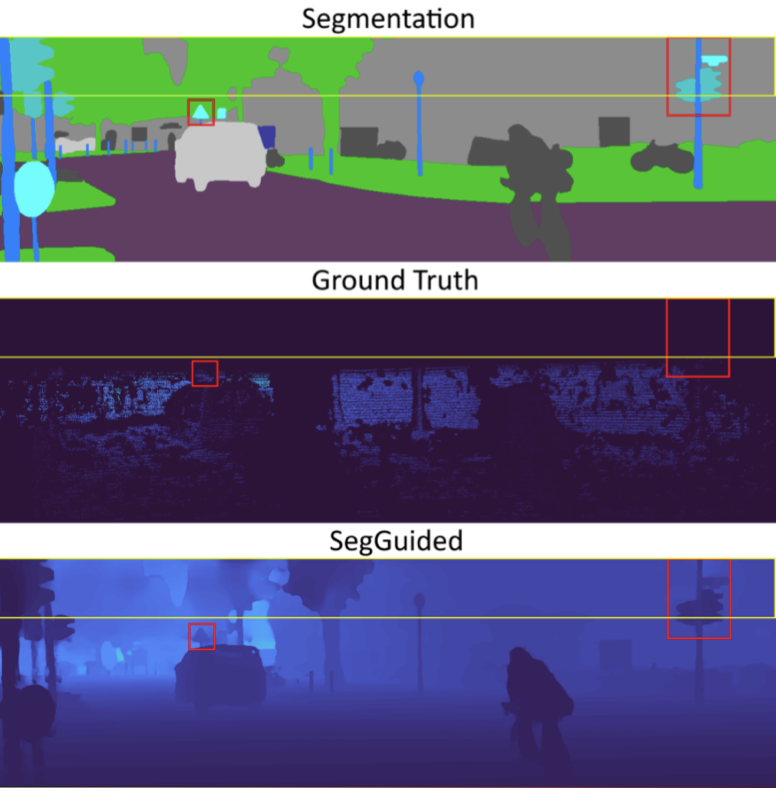

Segmentation-guided Domain Adaptation for Efficient Depth Completion

Complete depth information and efficient estimators have become vital ingredients in scene understanding for automated driving tasks. A major problem for LiDAR-based depth completion is the inefficient utilization of convolutions due to the lack of coherent information as provided by the sparse nature of uncorrelated LiDAR point clouds, which often leads to complex and resource-demanding […]

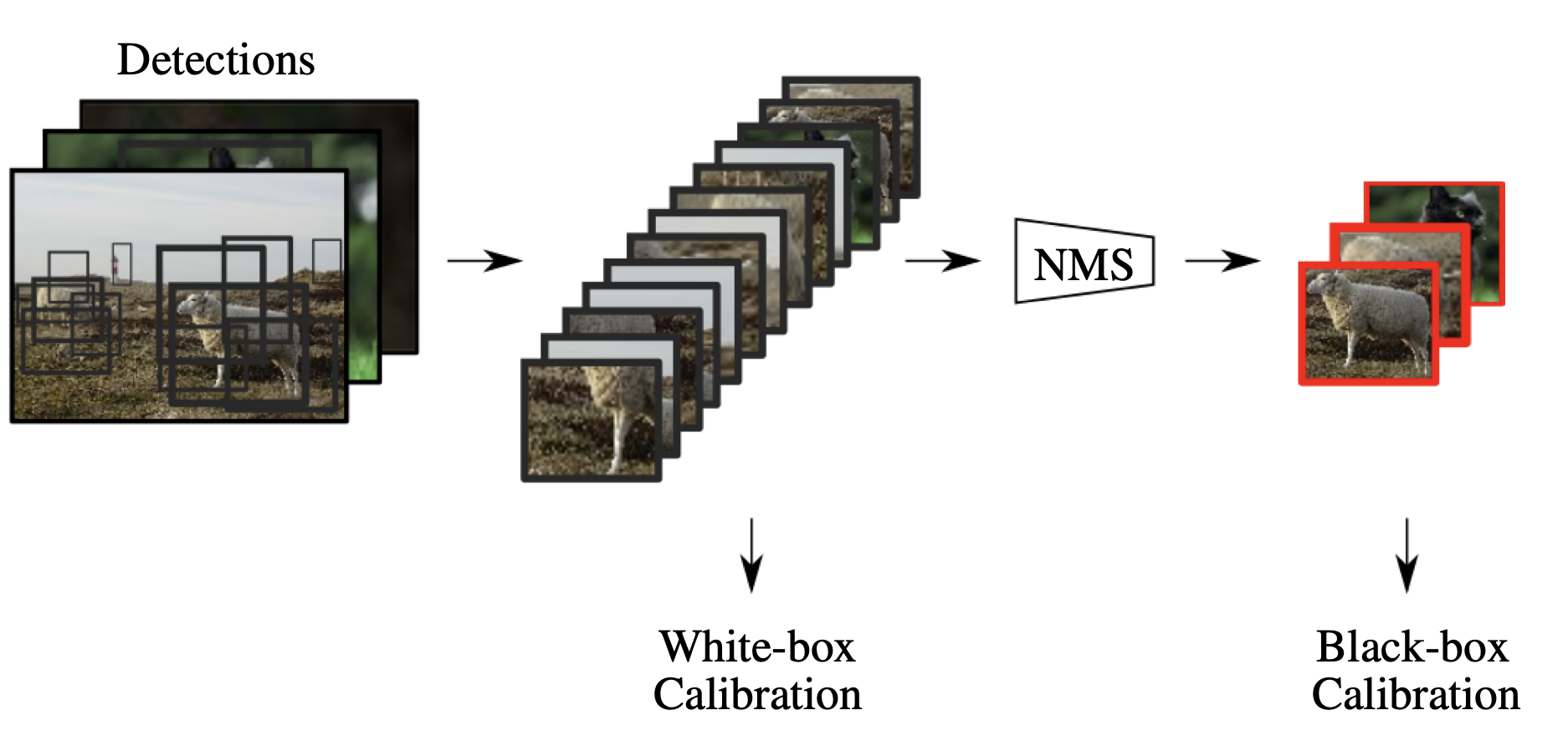

Confidence calibration for object detection and segmentation

Calibrated confidence estimates obtained from neural networks are crucial, particularly for safety-critical applications such as autonomous driving or medical image diagnosis. However, although the task of confidence calibration has been investigated on classification problems, thorough investigations on object detection and segmentation problems are still missing. Therefore, we focus on the investigation of confidence calibration for […]

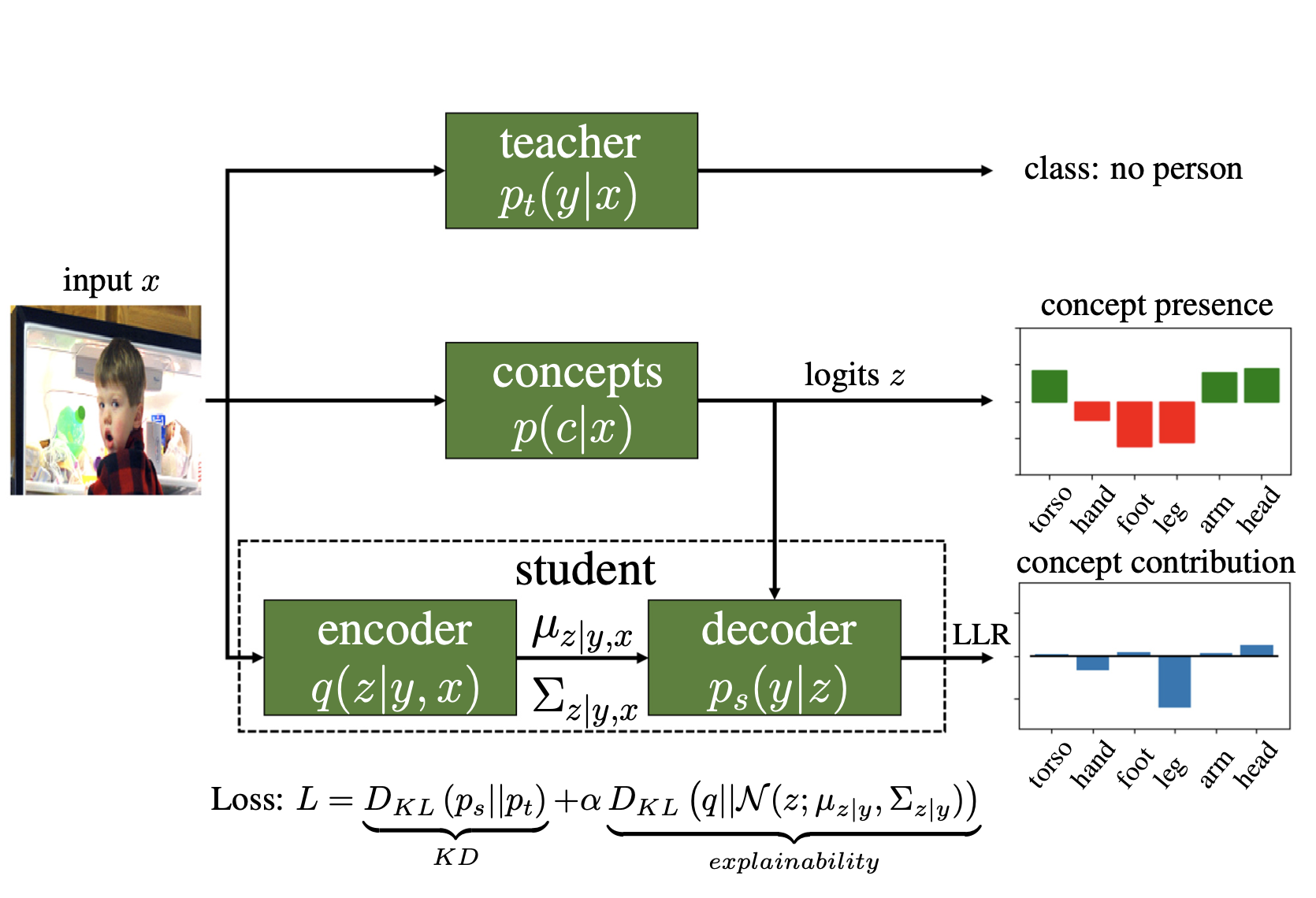

Towards Black-Box Explainability with Gaussian Discriminant Knowledge Distillation

In this paper, we propose a method for post-hoc explainability of black-box models. The key component of the semantic and quantitative local explanation is a knowledge distillation (KD) process which is used to mimic the teacher’s behavior by means of an explainable generative model. Therefore, we introduce a Concept Probability Density Encoder (CPDE) […]

Bayesian Confidence Calibration for Epistemic Uncertainty Modelling

Modern neural networks have been found to be miscalibrated in terms of confidence calibration, i.e., their predicted confidence scores do not reflect the observed accuracy or precision. Recent work has introduced methods for post-hoc confidence calibration for classification as well as for object detection to address this issue. Especially in safety-critical applications, it is […]

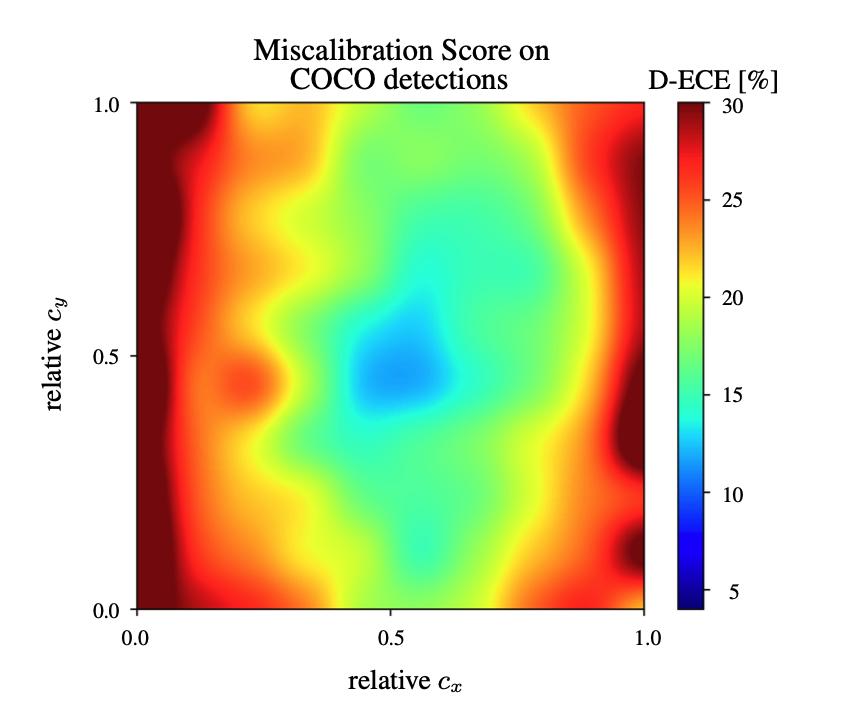

From Black-box to White-box: Examining Confidence Calibration under different Conditions

Confidence calibration is a major concern when applying artificial neural networks in safety-critical applications. Since most research in this area has focused on classification in the past, confidence calibration in the scope of object detection has gained more attention only recently. Based on previous work, we study the miscalibration of object detection models with respect […]

Multivariate Confidence Calibration for Object Detection

Unbiased confidence estimates of neural networks are crucial especially for safety-critical applications. Many methods have been developed to calibrate biased confidence estimates. Though there is a variety of methods for classification, the field of object detection has not been addressed yet. Therefore, we present a novel framework to measure and calibrate biased (or miscalibrated) confidence […]

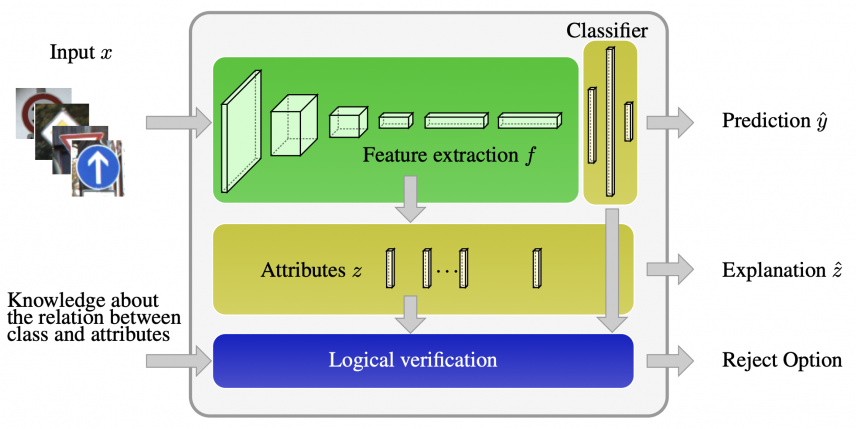

Dependency Decomposition and a Reject Option for Explainable Models

Deploying machine learning models in safety-related domains (e.g. autonomous driving, medical diagnosis) demands approaches that are explainable, robust against adversarial attacks, and aware of the model uncertainty. Recent deep learning models perform extremely well in various inference tasks, but the black-box nature of these approaches leads to a weakness regarding the three requirements mentioned […]

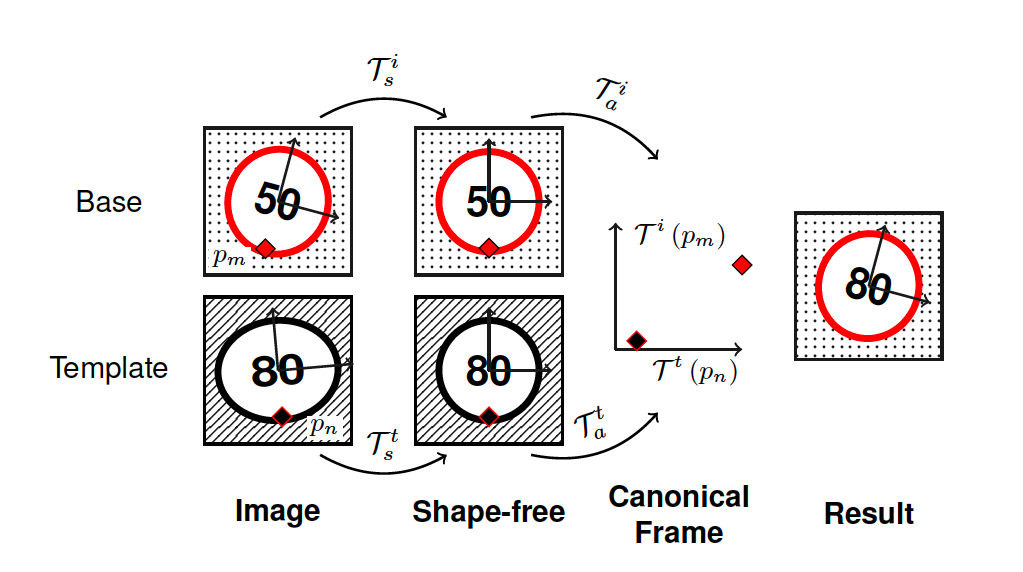

Markov random field for image synthesis with an application to traffic sign recognition

In current state-of-the-art systems for object detection and classification, a huge amount of data is needed. Even if large databases are available, some classes are typically underrepresented, and therefore the classifier is not able to capture the variability in appearance. In this work we present a novel method to enrich the training database with natural-looking […]